Developing AI Literacy With People Who Have Low Or No Digital Skills

Artificial intelligence offers a unique chance to level the playing field for those digitally excluded, but also risks deepening the digital divide. Our report explores how we can support 8.5 million UK adults lacking basic digital skills to safely engage with AI.

Foreword

AI offers a unique opportunity to level the playing field for those who are digitally excluded - making it easier and more accessible to engage online. It also presents huge risks - risks of deepening the digital divide between those who have the access, skills, confidence and support to benefit, and those who do not.

Successful AI adoption hinges on the whole of society's ability to navigate and understand this technology. 8.5 million people in the UK lack the basic digital skills needed for everyday online tasks. Without focused efforts, they will be left further behind as the technology continues to advance.

This important research has engaged with people who are digitally excluded and the community organisations supporting them. We've learned that introducing AI requires a careful approach when supporting people with low digital skills. AI can be overwhelming. People are fearful and anxious about AI and what it means for them and others.

Building foundational digital and media literacy skills is essential for understanding and engaging with AI - vital steps on a learning journey towards AI literacy. We need to recognise this, and ensure everyone can access the support they need, should they wish. Good literacy support is real, practical, and related to people’s everyday lives. It gives concrete examples of how AI can make life easier, save time and money, and open new possibilities.

What this research also highlights is that good literacy support depends on AI confidence in community organisations, libraries, and others involved in supporting digital, media, and AI literacy. We need to start by supporting people working in local communities to build their own understanding of AI. They are looking for support to understand available AI tools, use them safely and confidently, and share this knowledge with their communities.

At Good Things Foundation, thanks to the support of Accenture and other partners, we're building our AI offer, starting with a short video for those with low digital skills. Our priority now is to empower the National Digital Inclusion Network with access to understanding and support so that they can support others to be included in an age of AI.

Context

Good Things Foundation’s strategy evolved in 2022 to ensure we had the right ingredients in place to Fix the Digital Divide. We broadened our activities to focus on removing more of the barriers for digitally excluded people, working with and through thousands of community organisations who are members of the National Digital Inclusion Network.

Collectively, members of the National Digital Inclusion Network support hundreds of thousands of people each year. Their support helps build skills, confidence, and online access for managing health, finances, interests, and social connections. Network members provide local access to data, devices, and digital skills support, drawing on nationally available resources delivered by Good Things Foundation - Learn My Way, the National Databank, and the National Device Bank.

As AI continues to advance at speed, it risks further excluding people who are already digitally excluded. We're working with partners to evolve our offer and enable the National Digital Inclusion Network to support more people with the skills and confidence they need to engage safely with AI.

In June 2024, we commissioned Magenta Research to understand our key audiences' needs for AI literacy support, test our approaches, and steer the development of our future offer.

This report summarises the main insights.

Research approach

Our research took an iterative approach, involving a wide range of both community organisations and people supported with digital inclusion, in different locations and across a range of demographic groups. People using community organisations for support were at different stages of their digital skills journey; some were learning to use computers for the first time, whilst others had been receiving regular support and had developed more advanced digital skills.

Summary of key insights on developing AI literacy

AI in context

1. Digital literacy and media literacy are precursors to AI literacy. People are more open to learning about AI, and less fearful of it, if they already have basic digital and critical skills.

2. Supporting people with AI will allow them to take advantage of opportunities as they arise and manage their risks. AI adoption is inevitable, and we risk leaving people further behind. Engaging with AI should be built into existing digital skills learning.

AI literacy support for people who are digitally excluded

3. Awareness and understanding of AI is low. This lack of awareness is widespread, and community organisations are rarely asked questions about AI.

4. Negative media stories create a sense of fear and anxiety. The effect is stronger against a backdrop of limited knowledge and understanding.

5. Engagement with AI support is more likely if foundational digital skills are in place. Those who are learning basic skills (such as sending emails and browsing for information) feel that AI introduces an extra layer of complexity; they tend to feel overwhelmed by AI and its capabilities. Those with slightly higher levels of skills are more open to learning about AI and its applications.

How to deliver AI literacy support in community spaces

6. AI literacy support needs to be centred around practical tasks and tailored to an individual’s needs and interests. Digital inclusion support needs to take a holistic approach that frames AI within the context of an individual's life. This helps to reduce fear so that AI can be seen as an opportunity rather than a threat.

7. Both the positives and negatives of AI should be communicated. Developing an AI offer that addresses both the positives and negatives of AI is seen as a core part of any learning programme. Fun and playful topics like image and music generation can help dispel fears and promote AI's benefits but should be balanced with support to understand risks associated with AI.

8. AI should be embedded as part of a wider digital skills journey. AI support should be introduced gradually as skills and confidence grow, not as a standalone. Pointing out where AI is already being used can help (e.g. the AI Overview at the top of some browser search results). Wraparound support from community organisations should also be included.

9. Support should be interactive. As with all digital inclusion support, giving people the opportunity to try AI tools themselves allows them to learn in a supported environment, ask questions, and discuss their experiences.

10. Support should be inclusive and flexible. AI literacy support, like all digital inclusion support, needs to respond to people’s wider needs, such as translation and a range of formats and styles.

Building an AI confident community

11. Community organisations need support to be confident with AI tools. As the AI landscape is evolving at speed, community organisations find it difficult to stay up-to-date on the latest developments. Staff are conscious they are looked to as ‘the experts’, and are concerned about providing support to others when they have gaps in their own knowledge.

12. Help community organisations to use AI to enhance their organisational practices. There are significant potential benefits for both an organisation's operations and the people they support. Information about available AI tools and how they can be used to support organisational activities would be welcomed.

Our learnings on supporting people with AI literacy

AI and its role in digital inclusion

1. Digital literacy and media literacy are precursors to AI literacy

Digital inclusion, digital literacy, AI literacy and media literacy are all complex, multi-faceted concepts which are strongly interlinked.

People who are digitally excluded are less likely to have digital, media or AI literacy, and face disadvantages in developing these. Those most at risk of digital exclusion (older adults, people with no qualifications, disabled people, those who speak English as a second language) are at greatest risk of being left behind as AI adoption grows.

Supporting AI literacy needs to build on established foundational skills of digital literacy and media literacy. It will have limited success if approached in isolation.

2. Supporting people with AI will allow them to take advantage of opportunities as they arise and manage their risks

Community organisations consider AI support to be a vital asset for people who are digitally excluded. They recognise that, as AI adoption grows, a lack of knowledge about AI could widen the digital divide and exacerbate inequalities. AI also offers opportunities to make lives easier and create a more level playing field by helping individuals to improve their skills in ways that have not previously been possible, e.g. drafting letters or summarising information in a simple way, or translating between languages.

‘The digital divide is worrying but if handled properly, AI could help mitigate the digital divide. The tools that are potentially available through it could help with language barriers, people with visual and hearing impairments.’ - Community partner, London

‘It [AI] is everywhere. It’s not going to go away. We’re going to have to embrace it and talk to people about it. It could present an opportunity for equality where people at a disadvantage will have access to the same level playing field through AI.’ - Community partner, Manchester

AI literacy support for people who are digitally excluded

3. Awareness and understanding of AI is low

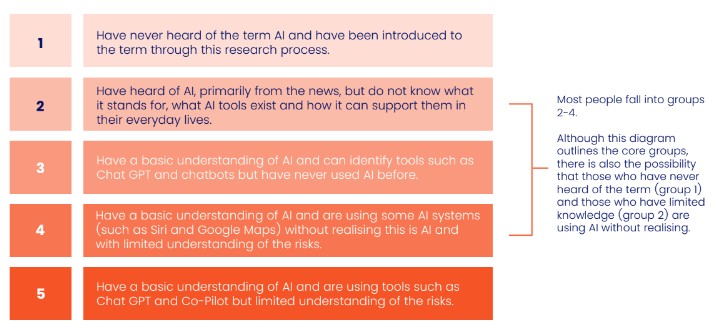

People who are digitally excluded lack general awareness and understanding of AI - especially in older age groups. Research identified five groups based on their knowledge and use of AI tools.

Community organisations are rarely asked questions about AI by the people they support, but when they are, the questions tend to be very basic.

‘No-one has sat me down and said 'can you chat to me about AI and show me how to use it'.’ - Community partner, Bradford

‘These are people who are digitally excluded at the best of times. People are asking very general questions like what does AI stand for? It’s as basic as that.’ - Community partner, London

4. Negative media stories create a sense of fear and anxiety

Conversation around AI among the people supported by community organisations is largely negative, characterised by fear and anxiety. Many people are exposed to negative media stories about AI, leading to concerns about privacy, security, job losses, and the unreliability of AI tools. These are all legitimate and important concerns, widely shared, but lack of knowledge and understanding of AI, its uses and benefits, can leave people fearful, disempowered, and distrustful of using online services more generally.

‘I do feel we're being manoeuvred by, you know, this thing which has our face and a lot of people are making a lot of money out of these things like Musk…and it's quite frightening.’ - Person supported, community partner, London

‘Our beneficiaries have a big fear of bureaucracy and mainstream services. They are asking questions like ‘Will someone be tracking me? Will somebody be reading what I’m writing? If I enter these details, will it affect my benefits?’ It’s the same with AI. People that have heard of it don’t trust it.’ - Community partner, Manchester

5. It is easier to engage people with AI support if they have foundational digital skills

People who are digitally excluded face barriers to being online, and therefore to accessing AI. These include the affordability of devices, internet access, and a lack of the skills or confidence needed to navigate the online environment safely. People who are learning basic skills (e.g. sending emails, browsing for information) feel that AI adds an extra layer of complexity, and tend to feel overwhelmed by AI and its capabilities. The day-to-day realities that drive people to seek support with digital inclusion (such as applying for Universal Credit or making appointments online) mean that AI is often seen as another challenge, so people may be less receptive to learning more about it.

Whilst those with higher digital skills may still feel fearful and overwhelmed, they are more receptive to and curious about AI. This is supported by wider research which suggests that those with higher digital literacy levels are more likely to be confident about AI than those with lower levels of digital literacy.

‘They see it [AI] as another barrier. Applying for Universal Credit online, for example, people already struggle with these kinds of issues. Adding AI to the mix complicates things.’ - Community partner, Manchester

‘Some people are only just mastering how to send an email. They have other things which they consider more important on their list. There are mixed views towards AI.’ - Community partner, London

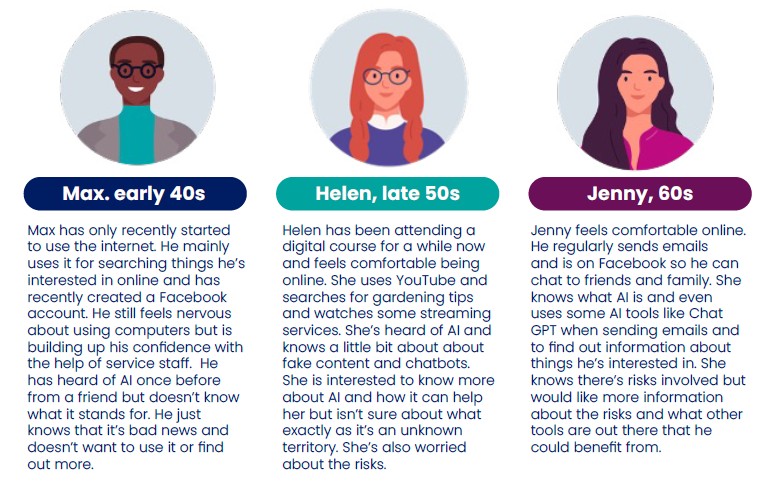

Please note: These personas are hypothetical examples, based on research with people supported by the hubs.

How to deliver AI literacy support in community spaces

6. AI support needs to be centred around practical tasks and tailored to an individual’s needs and interests

Those who are curious about AI want to know how it can help with daily tasks. Examples include drafting letters, CVs, emails, translating between English and another language, using chatbots when doing online banking, and searching for information.

For those who are less curious, community organisations can use their expertise in understanding people’s needs, and introduce AI as a tool to address those needs. Digital inclusion support needs to take a holistic approach that frames AI within the context of an individual's life. This helps to reduce fear and ensure AI is seen as an opportunity rather than a threat.

‘If you say ‘come learn about AI’ you get very limited uptake. Instead, it's about offering AI as a solution to the problem they are facing.’ - Community partner, London

‘Beneficiaries will have questions around 'how will this improve my life?'. You need to look at it from the perspective of beneficiaries and offer an easy-to-understand explanation on how the benefits of AI will make their life more positive.’ - Community partner, London

'It needs a holistic approach. You need to understand the client - their background, what they are going through, in order for them to develop some confidence around it [AI].' - Community partner, Milton Keynes

7. Both the positives and negatives of AI should be communicated

Developing an AI offer that addresses both the positives and negatives of AI is seen as a core part of any learning programme. Fun and playful topics like image and music generation can help dispel fears and promote AI's benefits.

However, the risks of AI cannot be overlooked. We heard examples of individuals using Generative AI tools without verifying sources or checking outputs. Those who are comfortable using the internet want to learn about AI risks and how to mitigate them, but they can be unclear about which specific risks they want to learn about.

‘I think that if AI stays focused on that [bad news/risks] we kind of missed a real opportunity where actually AI could be really useful to a lot of people.’ - Community partner, London

‘What's interesting though is, the thing with AI is you need to check it, and beneficiaries are sending emails with mistakes and saying things they don't really mean.’ - Community partner, Sheffield

‘Sometimes we'll see CV submissions and they've still got the [OpenAI’s] ChatGPT prompts in there so you can see what they asked.’ - Community partner, Milton Keynes

8. AI should be embedded as part of a wider digital skills journey

AI support should be introduced gradually so that people do not become overwhelmed or disengaged, and it should not be introduced on its own, without support, at the beginning of a learning journey. Highlighting where people are already making use of AI - but might not realise it (e.g. online searches and tools like Google Translate and Google Maps) - is helpful. Wraparound support, such as having upfront conversations to understand someone’s needs and provide context for using AI, is also extremely important. Providing support and guidance throughout the learning journey will help identify opportunities for further exploration as an individual becomes more knowledgeable about AI.

‘I’d need to have a conversation before showing them this [introduction to AI video]. It wouldn’t make as much sense otherwise. They might not know about things like chatbots. You’d want to find out their understanding and then frame the video a little bit, providing some context so it makes more sense to them and then talking after telling them how they could use it in their life.’ - Community partner, Manchester

9. AI literacy support should be interactive

As with all digital inclusion support, giving people the opportunity to try AI tools themselves allows them to learn in a supported environment, ask questions, and discuss their experiences. Interactive modules on Learn My Way, like testing out different prompts, are more likely to help people build their understanding of AI than reading or watching videos about it.

10. Support should be inclusive and flexible

Many people who need digital inclusion support have a wide range of other needs. AI literacy support - like all digital inclusion support - needs to adapt to and accommodate these other needs. English for Speakers of Other Languages (ESOL) learners are often identified as a particularly vulnerable group due to language barriers and wider circumstances. Measures like translating resources using AI-enabled tools that they are already familiar with (e.g. Google Translate) and adding subtitles can help.

Recognising that people learn in different ways and tailoring resources to various learning styles (including audio, video, and written content) will enable learning. Visual content is considered especially helpful for ESOL learners.

‘15 out of 20 people I support wouldn’t be able to access this [video]. It would need accurate translation. We support a lot of people from Kurdish communities who don’t have written language so a transcription would be nothing.’ - Community partner, Manchester

Building an AI confident community offer

11. Staff in community organisations need support to be confident with AI tools

Community organisations who support people with digital inclusion are aware of AI but have mixed knowledge and confidence. Building their knowledge and understanding of the overlaps between digital literacy, media literacy and AI literacy will help them build an effective programme of support. Some use Generative AI tools, but they recognise the vast number of tools available and their need to learn more about using them effectively, including writing prompts and understanding data and security risks.

As the AI landscape is evolving at speed, community organisations find it difficult to stay up-to-date on the latest developments. They are conscious they are looked to as ‘the experts’ and are concerned about providing AI support to people when they have gaps in their own knowledge. While they believe people need to know about AI risks, they don't feel confident teaching them due to their own uncertainties.

To continue developing, they would like training, ideally face to face, on how to lead discussions and run sessions.

‘We feel like we need to be the experts and we’re still learning ourselves.’ - Community partner, London

‘I would like to gain more knowledge of AI. I don’t know how it’s going to impact things in the future.’ - Community partner, Bolton

‘There needs to be much more emphasis on supporting hubs on a face-to-face basis.’ - Community partner, Birmingham

12. Help community organisations to use AI to improve and enhance their organisational practices

Community organisations recognise AI's potential benefits for both their own operations and the people they support. They welcome information about available AI tools and how they can be used. Areas where they know support would help include:

- Bid writing. Some community organisations already use AI for this, but they would appreciate support on addressing potential risks.

- General governance tasks, like writing risk assessments, although some hesitate due to concerns about meeting legal requirements.

- Writing prompts more effectively.

- Learning about design tools with embedded AI, like Canva, to create materials without hiring a designer.

Key recommendations for community organisations designing a programme of AI support

- Only introduce AI support to those already on their online journey. Those being supported by community organisations need to feel comfortable being online and navigating the internet before being introduced to AI support; otherwise they risk becoming overwhelmed and disengaging.

- Centre support around practical tasks. Communicate how AI can make people's lives easier and how it can support them in completing everyday tasks. Examples include writing CVs, creating letter templates, and translating between languages.

- Develop resources which communicate both the positives and negatives of AI. It is important to communicate the practical benefits of AI (how tools can make lives easier) as well as its risks.

- Embed AI support as part of existing digital skills learning. Gently introduce AI topics and tools at appropriate points within digital skills journeys and ensure that AI support is tailored to the needs of individuals. For example:

  - If they are learning about online banking, introduce the concept of chatbots and how they are used to answer common questions.

  - If they are attending a course around job skills, introduce Generative AI tools for supporting with CVs and covering letters.

  - If they are confident using emails, introduce writing assistant tools such as Grammarly and QuillBot.

- Provide the option to interact with AI tools as part of the learning process to enhance their confidence within a safe environment. Examples include:

  - Using chatbots for everyday tasks with the support of a staff member.

  - Using Microsoft Copilot or ChatGPT to find information and check the quality of the information.

  - Writing emails with the support of Grammarly or ChatGPT.

- Create resources which cater to a range of different needs. Use different formats, including video, print, independent and group learning, online and face-to-face learning. Translate content into different languages. Include subtitles on videos.

- Create guidance for hubs for any video content. For example, provide instructions on how to include subtitles on videos, how to change the language of subtitles, and how to slow the pace of the audio, in line with an individual’s needs.

Key recommendations for funders, commissioners, and others working in partnership with hubs

E.g. community centres, libraries, adult learning providers:

- Provide training around a range of topics to equip community organisations (staff, volunteers) with the AI knowledge, skills and confidence they need - for themselves, and to support those they serve in communities. Key topics include:

  - AI tools available and how they can be used;

  - Risks of using AI (e.g. relating to copyright infringement, AI bias); and

  - How to write effective prompts.

- Offer additional resources for hubs on ways AI could support them in managing their organisation, for example by using AI for bid writing, general governance, and social media content and marketing.

- Create a set of resources for hubs to assist them in delivering AI content with wrap-around support. Content should consider:

  - Avoiding jargon (with examples of how AI can be described in an accessible, easy to digest way).

  - The importance of supporting people every step of the way, including questions that can be asked after learning about AI.

  - A list of resources that can be used for more information on AI.

How we're responding to these learnings

Introduction to Artificial Intelligence

This new topic on Learn My Way, our free digital skills learning platform, is a helpful resource for those already on their digital skills journey, gently introducing them to AI and providing examples of where they may already be interacting with AI.

The key insights of this research have informed the ongoing development of our offer, which will focus on guidance and training for community partners and on identifying where it’s appropriate to weave AI learning into existing topics on Learn My Way.

We’ve devised a rolling programme of training and an accompanying resource collection which aims to act as a launch pad for community organisations. It will support them to start to use AI in their operations and develop their own knowledge and skills in preparation for embedding AI literacy learning into their digital skills offers. It will help hub staff to identify the benefits, considerations and potential risks of using AI and offer ways to mitigate these. It will also help them to assess governance options, write effective prompts, and assess AI tools and features that could be helpful in the running of an organisation.

In addition, we'll begin to surface more AI-focussed learning in our existing Learn My Way offer, bringing transparency to where AI systems may already be present in some of the things people already do online.